Heatwave Prediction

Overview

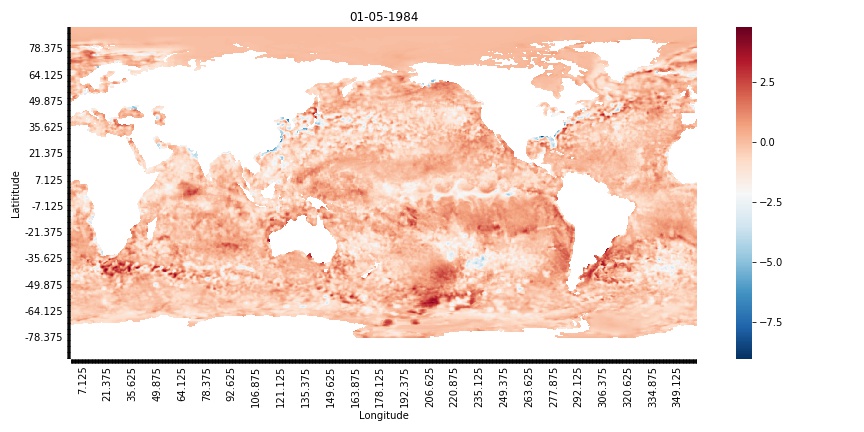

The purpose of this project is to develop a model that forecasts heatwave events, with the intended use of informing agricultural planning and community preparedness. The model will be built on Sea Surface Temperature (SST) data collated by NOAA. SST data is a valuable input to climate modeling and weather forecasting, and it has been used successfully in similar models[1]. In this project, I plan to apply neural networks to this data in an effort to predict heatwave events in the eastern US.

To develop the model, you must first understand the data. SST data is spatiotemporal in structure. But what does this really mean?

Spatiotemporal Data

First, it means there is a spatial component to the data. In other words, the relative position of the data points plays a key role, much like the pixels in, say, a picture of a dog. When the pixels are arranged properly, we can easily identify the dog in the picture. But when their locations are randomized, the picture becomes a jumbled mess! For our purposes, the relative latitudes and longitudes of the SST measurements are essential to modeling the data properly.

Second, it means there is a temporal component to the data: the order in which the data is processed plays a key role. The sentence "the dog likes music" makes sense syntactically, while the sentence "likes music dog the" suddenly loses all its meaning. Just as the words of a sentence must be read in order, the SST data must be processed in chronological order.

So how do neural networks process this type of data?

Modeling

Let's again start by focusing on spatial data. Convolutional Neural Networks (CNNs) have become the industry standard for handling such data. They were loosely modeled on the way humans process images or, more technically, spatial data. Biologically, individual neurons respond to stimuli in only a small region of the visual field, and the regions covered by many such neurons overlap to form our entire visual field[2]. CNNs work much the same way. A filter is passed over small chunks of the image to detect whether certain features, like an edge or a corner, are present. The following animation illustrates the concept of these filters. Higher-level filters may later be passed over these results to "stitch" the collection of edges and corners together, perhaps recognizing an eye or the nose of a face. If two eyes, a nose, and a mouth are discovered, the CNN may then be capable of recognizing a face in the image. For the purpose of this project, the goal is for the CNN to identify SST patterns that correspond to higher air temperatures in the US.
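To make the idea of a filter concrete, here is a minimal sketch in plain Python of sliding a single 3x3 filter over a tiny image. The image and the vertical-edge filter values are hypothetical and hand-picked for illustration; in a real CNN the filter values are learned during training, and libraries compute this far more efficiently. Like most deep-learning libraries, the sketch computes cross-correlation (the filter is not flipped).

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the kh x kw patch whose top-left corner is (i, j)
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        out.append(row)
    return out


# Hypothetical 4x6 "image": dark left half (0), bright right half (1)
image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hypothetical hand-picked vertical-edge filter; a trained CNN learns its own
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, vertical_edge)
for row in feature_map:
    print(row)
```

The filter responds (3.0) only where its window straddles the boundary between the dark and bright halves, and it stays at 0.0 over the uniform regions. That is the "is an edge present here?" question described above, asked at every position in the image.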

For temporal data, Long Short-Term Memory (LSTM) models have become the standard. The LSTM is a particular architecture in the Recurrent Neural Network (RNN) family: models whose output at one "step" is fed back in as input to the next. I put "step" in quotes here to emphasize that it may refer to the next timestep, but it may also refer to the next word in a sentence or the next number in a sequence. If the order of the data is essential to the problem, LSTMs tend to be a good choice.
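Here is a minimal sketch of that feedback loop: a single-unit recurrent network in plain Python, where the hidden state from one step is fed in alongside the next input. The weights `w_x`, `w_h`, and `b` are arbitrary illustrative values, not anything trained for this project.

```python
import math


def rnn(sequence, w_x=0.5, w_h=0.9, b=0.0):
    """Minimal scalar recurrent network: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    The default weights are arbitrary illustrative values, not trained parameters.
    """
    h = 0.0  # initial hidden state, before any input has been seen
    states = []
    for x in sequence:
        # The previous step's output h is fed back in alongside the new input x
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


forward = rnn([1.0, 2.0, 3.0])
backward = rnn([3.0, 2.0, 1.0])
# Same inputs, different order: the final states differ
print(forward[-1], backward[-1])
```

Feeding the same three numbers in a different order produces a different final state, which is the property that makes recurrent models sensitive to sequence order.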

This model, too, took inspiration from human and biological processes. The core concept is that the model maintains a "memory" of the previous timesteps that is adjusted and updated on each pass by a collection of gates[3]. These gates are Densely Connected Neural Networks (this is important!). With each pass, pieces of "memory" may be forgotten and new pieces may be added before moving on to the next step. If I'm deciding what shirt to wear today, I may want to consider all of the shirts I've worn in the past week, but I probably don't need to consider what I wore three months ago. For an illustration of how data flows through this model, check out the following animation!
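The gate mechanics can be sketched as a single LSTM step. To keep it readable, this version uses a single memory unit, so each densely connected gate collapses to one weight on the input, one weight on the previous output, and a bias; in a real LSTM each gate is a dense layer over whole vectors. The parameter names and values in `params` are hypothetical, chosen only to show data flowing through the cell.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell.

    Each gate is a (here one-unit) dense layer over the current input x
    and the previous hidden state h_prev.
    """
    f = sigmoid(p["wf_x"] * x + p["wf_h"] * h_prev + p["bf"])    # forget gate: how much old memory to keep
    i = sigmoid(p["wi_x"] * x + p["wi_h"] * h_prev + p["bi"])    # input gate: how much new info to write
    g = math.tanh(p["wg_x"] * x + p["wg_h"] * h_prev + p["bg"])  # candidate memory content
    o = sigmoid(p["wo_x"] * x + p["wo_h"] * h_prev + p["bo"])    # output gate: how much memory to expose
    c = f * c_prev + i * g   # forget part of the old memory, add part of the new
    h = o * math.tanh(c)     # hidden state passed on to the next step
    return h, c


# Hypothetical, arbitrary weights; a trained model would learn these
params = {name: 0.5 for name in [
    "wf_x", "wf_h", "bf", "wi_x", "wi_h", "bi",
    "wg_x", "wg_h", "bg", "wo_x", "wo_h", "bo",
]}

h, c = 0.0, 0.0  # empty memory before the first step
for x in [1.0, 0.5, -0.2, 0.8]:
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:+.1f}  memory c={c:.3f}  output h={h:.3f}")
```

The line `c = f * c_prev + i * g` is the shirt example in miniature: the forget gate `f` decides how much of the old memory survives, and the input gate `i` decides how much of today's information gets written in.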

CNNs and LSTMs are each great at their own jobs, but we need a model that combines the two. Remember how I said the Densely Connected Neural Networks within the LSTM were important? Let's just swap them out for CNNs[4]! This idea was developed by Shi et al. and aptly named the "ConvLSTM". It is the same LSTM cell animated above, but the gates are now convolutional, which allows it to identify spatiotemporal patterns. In the paper referenced below, the authors go on to demonstrate the success of this architecture in forecasting precipitation patterns (another spatiotemporal problem!). In this project, I will be optimizing this model to suit the needs of SST data and ternary forecasts (above average, average, or below average). For future updates on the project, feel free to refer back to this post!
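To show what "the gates are now convolutional" means in code, here is a minimal single-channel ConvLSTM step in plain Python. It mirrors the standard LSTM update, but each gate weight is a 3x3 convolution kernel applied across the whole grid, so each cell's gates depend on its neighbors as well as itself. This is a simplified sketch under stated assumptions: the 4x5 grids stand in for hypothetical SST anomaly maps, the kernels and biases are arbitrary rather than trained, and it omits the peephole terms that appear in Shi et al.'s formulation. In practice a framework layer such as Keras's `ConvLSTM2D` would be used instead.

```python
import math


def conv_same(grid, kernel):
    """Cross-correlation with zero padding, so the output keeps the grid's shape."""
    rows, cols = len(grid), len(grid[0])
    k = len(kernel) // 2
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            total = 0.0
            for di in range(-k, k + 1):
                for dj in range(-k, k + 1):
                    r, s = i + di, j + dj
                    if 0 <= r < rows and 0 <= s < cols:  # cells outside the grid count as 0
                        total += grid[r][s] * kernel[di + k][dj + k]
            out[i][j] = total
    return out


def combine(a, b, bias, act):
    """Apply act(a + b + bias) cell by cell."""
    return [[act(x + y + bias) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def convlstm_step(x, h_prev, c_prev, p):
    """One ConvLSTM step on single-channel 2D grids (no peephole terms)."""
    def gate(name, act):
        # Convolution over the input plus convolution over the previous hidden state
        return combine(conv_same(x, p[name + "_x"]),
                       conv_same(h_prev, p[name + "_h"]),
                       p[name + "_b"], act)

    f = gate("f", sigmoid)    # forget gate, one value per grid cell
    i = gate("i", sigmoid)    # input gate
    g = gate("g", math.tanh)  # candidate memory
    o = gate("o", sigmoid)    # output gate
    c = [[fv * cv + iv * gv for fv, cv, iv, gv in zip(fr, cr, ir, gr)]
         for fr, cr, ir, gr in zip(f, c_prev, i, g)]
    h = [[ov * math.tanh(cv) for ov, cv in zip(orow, crow)]
         for orow, crow in zip(o, c)]
    return h, c


# Hypothetical 4x5 SST anomaly grids for three consecutive time steps
frames = [
    [[0.1 * (r + t) - 0.05 * col for col in range(5)] for r in range(4)]
    for t in range(3)
]

# Arbitrary illustrative parameters: a small averaging kernel for every gate
kernel = [[0.1] * 3 for _ in range(3)]
params = {}
for name in "figo":
    params[name + "_x"] = kernel
    params[name + "_h"] = kernel
    params[name + "_b"] = 0.0

h = [[0.0] * 5 for _ in range(4)]  # hidden state, same shape as the grid
c = [[0.0] * 5 for _ in range(4)]  # memory, same shape as the grid
for frame in frames:
    h, c = convlstm_step(frame, h, c, params)
print(len(h), len(h[0]))
```

Because the hidden state `h` and the memory `c` keep the grid's spatial shape, the model can carry forward where a warm anomaly was, not just that one existed.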

References

- [1] McKinnon et al. (2016). Long-lead predictions of eastern United States hot days from Pacific sea surface temperatures.

- [2] Sumit Saha. A Comprehensive Guide to Convolutional Neural Networks.

- [3] Michael Nguyen. Illustrated Guide to LSTMs.

- [4] Shi et al. (2015). Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting.